Teaching English in Rural Africa through Conversational Robotic Agents

KimYen Truong, Akiva Notkin and Maya Cakmak (PI)

I. INTRODUCTION

The goal of this project is to develop conversational

robotic agents that can carry out foreign language lessons

autonomously or with partial human supervision, for individuals or small groups. Although such robots could be used

for teaching any language to any age group anywhere in the

world, this project focuses on teaching English to children

in countries where access to English-language education is

limited, as part of their primary education (K-6). We are

motivated by recent results demonstrating the effectiveness

of robotic instruction in comparison to other electronic media

[12], [14], [9], [16] and the recent increase in the availability of reliable, low-cost robotic platforms. Our project

will explore several frameworks that allow English teachers

around the globe to remotely program, generate content

for, and control an instructional robot to deliver language

lessons. We will leverage our relationship with a non-profit

Moroccan foundation that builds schools across Africa, to

deploy our system and test different frameworks developed

as part of this project. Our project will impact children in

these schools by facilitating English learning supervised by

qualified teachers, and consequently giving them access to

the world’s largest information resources for self-education.

II. PROBLEM DESCRIPTION

English is one of the most common languages in the world

(both as a native and as a second language) and it is arguably

the most useful language to know. Speaking English means

access to abundant information resources, including a large

majority of all online content [26], published scientific papers

[25], and other educational resources, such as Massively

Open Online Courses (MOOCs) [5]. In many countries

English is the main second language (L2) taught in public

schools as early as in kindergarten, particularly in countries

that were colonized by the British Empire in the

last century [10]. However, in some developing countries,

English has not spread widely as part of public education

curricula. For instance, most African countries have typically

taught French as L2. This has limited the flow of students from these countries to English-speaking international

academic institutions and isolated African institutes from

the larger research community. The schools that wish to

offer English as a second language in these countries often

have difficulty finding qualified English teachers due to this

historical bias and have difficulty attracting foreign teachers

due to political instability or limited resources.

The authors are with the Computer Science and Engineering Department,

University of Washington, Seattle, WA 98195, USA.

Fig. 1. Two platforms that will be used in this project: (a) Kubi (Revolve Robotics) hacker edition ($399) with a Google Nexus 7 tablet ($229.00) and (b) Jibo developer edition ($599).

III. PROPOSED SOLUTION

We propose bringing English-language education to these

countries through low-cost social robots. Our method employs qualified English teachers around the globe to program,

develop content for, and control social robots to deliver

English lessons. To that end, we propose three operation

modes:

• Tele-presence: In this mode a remote teacher will

directly interact with the students through the robot.

The teacher’s live video stream will appear on the

robot’s screen. The teacher will have the ability to

move the robot’s neck to look around the room and

to electronically overlay pictures on the screen. The

teacher will have full control over the content delivery

for the lesson.

• Semi-autonomous robotic agent: The second mode

will employ a supervised-autonomy approach [7] where

the remote teacher will be in-the-loop to make key

decisions about the robot’s behavior but will not control

the robot at a low level (e.g., moving the neck motors). For instance, the teacher might decide whether

a student’s answer is correct or wrong, but the robot’s

response in either case would consist of automated procedures

(e.g., facial expressions, spoken responses, gestures) that

are triggered once the teacher makes the call.

• Programmable autonomous robotic agent: The third

mode will involve the robot operating autonomously to

perform simple exercises. The content of the exercise

will be generated by remote teachers who will be given

a simple domain-specific programming language to associate content with robot behaviors including facial

expressions, gaze, and head gestures.
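
The semi-autonomous mode can be illustrated with a minimal Python sketch. It assumes a lookup table from the teacher's judgment to a bundle of automated robot behaviors. The names `Behavior`, `RESPONSES`, and `respond_to_judgment`, as well as the specific expressions, phrases, and gestures, are hypothetical and are not part of the proposed system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Behavior:
    """One automated response: what the robot shows, says, and does."""
    facial_expression: str
    spoken_response: str
    gesture: str


# Hypothetical behavior table; the concrete values are placeholders.
RESPONSES = {
    "correct": Behavior("smile", "Well done!", "nod"),
    "wrong": Behavior("puzzled", "Let's try again.", "head_tilt"),
}


def respond_to_judgment(judgment: str) -> Behavior:
    """Return the automated behavior triggered by the teacher's call.

    The teacher supplies only the high-level judgment; the low-level
    details (expression, speech, gesture) come from the table.
    """
    if judgment not in RESPONSES:
        raise ValueError(f"unknown judgment: {judgment!r}")
    return RESPONSES[judgment]


if __name__ == "__main__":
    print(respond_to_judgment("correct"))
```

In this sketch the teacher's interface would only need two buttons, one per judgment, which is one way the teacher's workload might be kept below that of full tele-operation.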

The three approaches have different trade-offs in terms

of (i) the required technology and automation, (ii) types

and interactivity of lectures that can be delivered, (iii) time

involvement and workload on the remote teacher, and (iv)

availability of a stable high-bandwidth internet connection.

Telepresence is currently possible and does not require development of new technologies or new system integration.

It allows for very flexible lesson content but it requires

constant involvement of the teacher and imposes a high

mental load. A semi-autonomous system requires the design

of interpretable and engaging robotic behaviors, but handles

difficult algorithmic problems using human computation.

Although it still requires the teacher to be present, the

mental load might be reduced. The content of lectures is still

flexible but is subject to the constraint of the robotic agent

being able to deliver it. Finally, the autonomous system requires

automation of the instructional interaction which has many

open challenges. Nonetheless, we can attain autonomous

interactions that involve simple exercises through the integration of existing face detection, tracking, and recognition,

speech recognition, dialog management, animation, and robot

control technologies. While the lesson content and style are

less flexible for these systems, they allow for efficient use

of labor—remote English teachers can create lesson content

once and the same content can be delivered by multiple

robots in different schools.
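
One way to read trade-offs (iii) and (iv) is as a rule for choosing a mode at the start of a session. The sketch below is an illustration under stated assumptions, not the proposed framework: it assumes that tele-presence needs both a remote teacher and a link able to carry live video, that the semi-autonomous mode needs a remote teacher but can tolerate a weaker link, and that the autonomous mode needs neither. The names `Mode` and `choose_mode` are hypothetical.

```python
from enum import Enum


class Mode(Enum):
    TELEPRESENCE = "tele-presence"
    SEMI_AUTONOMOUS = "semi-autonomous"
    AUTONOMOUS = "programmable autonomous"


def choose_mode(teacher_online: bool, link_supports_video: bool) -> Mode:
    """Pick the most flexible mode that the current conditions allow.

    Assumption: flexibility decreases from tele-presence to
    semi-autonomous to autonomous, so the first feasible mode wins.
    """
    if teacher_online and link_supports_video:
        return Mode.TELEPRESENCE
    if teacher_online:
        return Mode.SEMI_AUTONOMOUS
    return Mode.AUTONOMOUS


if __name__ == "__main__":
    print(choose_mode(teacher_online=True, link_supports_video=False).value)
```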

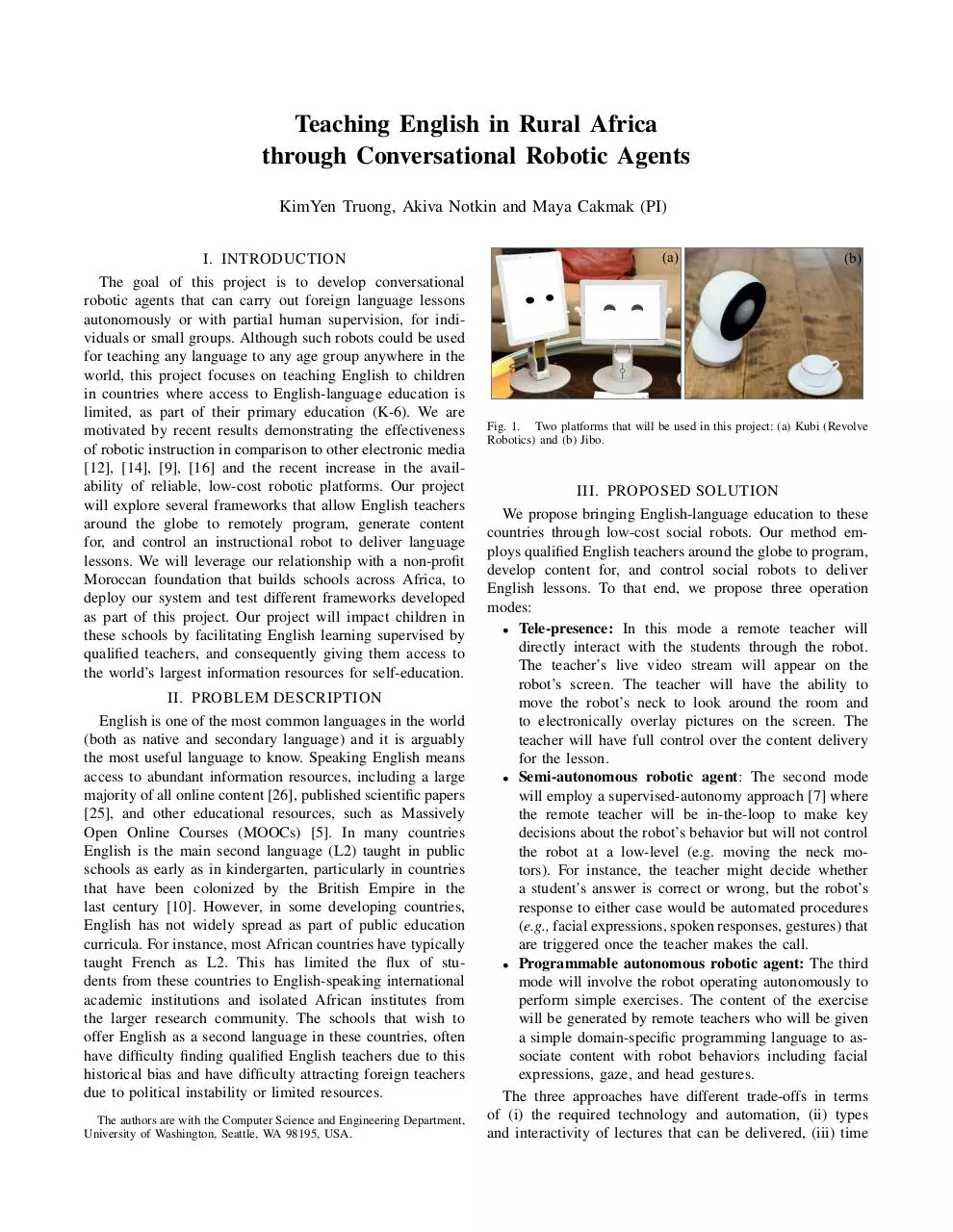

A. Platform

We will implement our methods on two low-cost platforms

(Fig. 1). The first is Kubi [4], a pan-tilt neck that holds

a tablet head. The Kubi Hacker’s edition costs $399 and

the Nexus tablet for controlling it costs $229. This setup

has been used by our team as part of preliminary work on

developing interactive behaviors for conversational agents.

The alternative platform is Jibo [3], a crowdfunded product

with a pan-tilt head containing a round LCD display, a

camera, microphone, and speakers. The developer edition

of Jibo costs $599. Our team has pre-ordered a Jibo which

will be delivered in Summer 2015. The two platforms

are comparable in price and functionality. Both platforms

are programmable and can be used for telepresence. Our

methods will be transferable between the two platforms.

B. Lesson content

The language lessons for our system will be at a beginner

level and will be based on Duolingo [2], a free language

learning platform that employs bilinguals around the world

to contribute content to new lessons. We will use Duolingo’s

French-to-English lessons. To minimize dependence on the

children’s prior knowledge of French, we will leverage the

additional communication channels afforded by our robotic

platform including facial expressions on the graphical display, head gestures, and gaze, which can be directed towards

different students or objects of interest in the instructional

environment.

C. Context

Based on the size of the considered platforms we will

initially target smaller groups of children, up to 5 at a time.

The lessons will involve the children sitting in a circle around

the robot. The lessons will be supervised by a local teacher

or school staff member who has been trained on how to start the

system and get it ready for a lecture. This person will also

be in charge of guiding the children to sit around the robot

in an optimal formation for the system to work properly.

Fig. 2. (a)-(f) Examples of other robots used in education [16], [12], [19], [14], [27].

IV. RELATED WORK

Our work is motivated by recent studies within the human-robot interaction community demonstrating the effectiveness

of robotic instruction. Leyzberg et al. compared three

course content delivery media in a large-scale study with

100 participants: (i) a physical robot, (ii) a video representation of the same robot, and (iii) a disembodied voice [16].

They found that participants who received lessons from the

physically present robot outperformed the participants in

the other two conditions in learning to solve a particular

type of problem. Their follow-up work demonstrated further

learning gains with personalization of content to the particular students [17]. Corrigan et al. studied the automatic

detection of student engagement so as to personalize pace

and content to the individual students [9]. In a study involving tele-operated mobile robots administering English

lessons in a Korean classroom, Lee et al. demonstrated that

robot-assisted language learning significantly increased the

students’ satisfaction, interest, confidence, and motivation

[14], [13]. Kanda et al. performed an 18-day field trial with

an English speaking robot in a Japanese elementary school,

highlighting the potential drop in the novelty effect of a

robot that is constantly on, but nonetheless demonstrating

vocabulary learning outcomes for students who had sustained interactions with the robot [12]. Tanaka and Matsuzoe

demonstrated that a peer robot (rather than a teacher robot)

might be more effective in promoting learning [23].

Researchers have also started to explore the idea of using

tele-presence in education, some with a particular focus on

language learning. For instance, Tanaka and Noda explored

the idea of connecting two classrooms between Japan and

the US [24]. Yun et al. developed a tele-education platform

[28] and investigated the design of easy-to-use interfaces

for teachers to remotely control such robots [27]. Others

have looked into the use of telepresence robots by children

to participate in a classroom while they are hospitalized or

during bed rest [22].

Although we do not present a comprehensive survey in this

document, several other lines of research are also relevant for

our work, including (i) intelligent tutoring systems [8], (ii)

second language acquisition (SLA) [18], and (iii) computer

assisted language learning [15]. Our work is informed by

findings revealed and methods developed within these fields.

In addition, other areas within human-robot interaction, including gaze [20], human-robot dialog management [6], and

facial expression animation [21] have important implications

that inform the design of our system.

A. Expected contributions

Previous work presents examples of systems resembling

the one proposed in this project and motivates the use of

robots for the problem addressed (Sec. II). Nonetheless,

researchers in this area acknowledge that they have only

scratched the surface of a problem with many challenges

and great potential for impact [11], [13]. Our work will

push the envelope in the use of low-cost robots, compared to

robots that have previously been used in similar contexts (all

above a $2K price point). Rather than a short-term trial as

in some of the previous work, we will aim for a permanent

deployment with multiple generations of students over the

years, yielding new types of empirical data. Finally, our

work will result in a new framework for using alternative

techniques with different levels of autonomy (Sec. III) in a

complementary fashion, to optimize for student learning and

efficient use of the remote teacher’s time and effort.

V. PROPOSED ACTIVITIES

Our implementation will leverage preliminary work on

the development of a conversational agent using the Kubi

platform. This includes existing routines for facial expressions and animations, head gestures, face tracking,

open-ended speech dictation, and text-to-speech. The main development activities in the proposed project include (i)

the implementation of the teachers’ user interfaces for the

telepresence and semi-autonomous modes, and (ii) the design

and implementation of the simple programming language to

allow scripting of autonomous delivery of lessons. We will

also transfer our implementations to the Jibo platform and

improve the generalizability of our implementation as part

of this process.
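
The proposal does not specify the syntax of the scripting language, so the following Python sketch is only one possible shape for it. It assumes a line-oriented format of `command: argument` pairs, with a hypothetical command vocabulary drawn from the behaviors named in Sec. III (speech, facial expressions, gaze, head gestures) plus a `listen` step for the expected answer. The function `parse_lesson` and the sample `LESSON` are illustrative assumptions.

```python
from typing import List, Tuple

# Hypothetical command vocabulary, based on the behaviors named in the text.
KNOWN_COMMANDS = {"say", "expression", "gaze", "gesture", "listen"}


def parse_lesson(script: str) -> List[Tuple[str, str]]:
    """Parse a lesson script into (command, argument) steps.

    Blank lines and lines starting with '#' are ignored. Only the first
    colon on a line separates the command from its argument.
    """
    steps = []
    for lineno, raw in enumerate(script.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        command, sep, argument = line.partition(":")
        command = command.strip().lower()
        if not sep or command not in KNOWN_COMMANDS:
            raise ValueError(f"line {lineno}: cannot parse {raw!r}")
        steps.append((command, argument.strip()))
    return steps


LESSON = """
# Vocabulary exercise: apple
expression: happy
gaze: student_1
say: This is an apple. Can you say apple?
listen: apple
gesture: nod
"""

if __name__ == "__main__":
    for command, argument in parse_lesson(LESSON):
        print(f"{command:<10} {argument}")
```

A plain-text format of this kind could be written once by a remote teacher and then sent to several robots, and the same parsed steps could be mapped to either Kubi or Jibo by a platform-specific back end.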

Before deployment we will test our system in two ways.

We will evaluate the robustness of our system within micro-interactions through in-lab studies by recruiting student-teacher pairs. The content of these interactions will be staged

to ensure diversity of test cases. Next, we will do a short-term

mock deployment of our system as part of an elementary

school summer program at our institution involving a French-language learning session with the robot over the course of

two weeks. The content for this evaluation will be based

on Duolingo’s English-to-French lessons, which are very

similar to the French-to-English ones. The teacher during

these tests will be one of our team members.

For the actual deployment of our system we will partner with the Benjelloun-Mezian Foundation [1] based in

Casablanca, Morocco and led by BMCE bank chairman

Mr. Othman Benjelloun and his wife Dr. Leila Mezian-Benjelloun. The foundation dedicates a large portion of its

philanthropic activities to the promotion of education at all

levels, both in Morocco and the rest of Africa. It has built

a number of elementary schools in rural parts of Morocco

and it provides scholarships to Moroccan students for higher

education in international institutions. The PI’s relationship

with the foundation is a result of the Benjellouns’ fascination

with robots. In the Summer of 2013, during the PI’s postdoctoral research at Willow Garage, Inc., Mr. Benjelloun

purchased a PR2 robot made by the company for the headquarters of his bank in Casablanca. The PI was part of the

team that travelled to Morocco to deploy this robot. The

problem addressed in this proposal, as well as the idea for

a robotics-based solution, was in fact given to the PI by

Dr. Mezian-Benjelloun who had read about similar systems

that were developed and deployed in Japan and Korea [12],

[28], [14]. The PI has maintained a connection with the

family afterwards and has recently deployed another PR2

robot in their son’s US home. Hence, in this project, we

plan to do our first deployment in one of the schools built

by the foundation and will rely on the family’s support and

influence to facilitate the deployment.

The teacher for the deployed system will initially be one

of our team members but over time we will work to recruit

volunteers from our local community. We hope to leverage

other partnerships and collaborations across the University

of Washington campus, including the UW language learning

center, the UW center for game science and the UW Change

and ICTD (Information and Computing Technology for the

Developing World) groups.

A. Timeline

We plan to complete our implementation by the beginning

of Summer 2015, carry out the robustness tests and the mock

deployment during the Summer of 2015 and implement any

necessary revisions based on these tests, and perform the

final deployment in Morocco in October 2015.

B. Budget

We ask for a total of $2000 support for the purchase of

the platform that will be deployed in Morocco (Kubi: $399

+$229 or Jibo: $599) and to cover travel expenses for one

team member to go to Morocco for the deployment and

training of the staff (approximately $1100 for the flight and

$300 for accommodations).
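
As a quick check of the arithmetic, the short Python sketch below adds up the figures quoted above for each platform option. All amounts come from the text; the travel figures are stated as approximate, so the totals are approximate as well.

```python
# Figures quoted in the budget paragraph, in US dollars.
KUBI_HACKER_EDITION = 399
NEXUS_TABLET = 229
JIBO_DEVELOPER_EDITION = 599
FLIGHT = 1100          # approximate, per the text
ACCOMMODATION = 300    # approximate, per the text

travel = FLIGHT + ACCOMMODATION
kubi_total = KUBI_HACKER_EDITION + NEXUS_TABLET + travel
jibo_total = JIBO_DEVELOPER_EDITION + travel

print(f"Kubi option: ${kubi_total}")  # 399 + 229 + 1400 = 2028
print(f"Jibo option: ${jibo_total}")  # 599 + 1400 = 1999
```

With these figures the Jibo option comes to $1999 and the Kubi option to $2028, so both sit close to the requested $2000.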

C. Project team

Our team has three members: (i) an undergraduate student who is taking the lead in performing the proposed

research as part of her honors thesis at the University of

Washington, (ii) a high-school intern who is helping with the

implementation of our system, and (iii) an assistant professor

(PI) supervising the project. The PI has extensive experience

developing robust interactive robots and conducting human-robot interaction experiments, and she has had several real-world, one-of-a-kind robot deployment experiences. Short

biographies of team members are given below.

KimYen Truong is a senior undergraduate in Computer

Science & Engineering at the University of Washington. Her

research interests are in socially assistive robotics. She has

been working at Dr. Cakmak’s lab for six months on designing interactions for a tablet-based table-top conversational

robot with the Kubi platform. http://kimyen.org/

Akiva Notkin is a junior at Ingraham High School in Seattle,

Washington. His research interests are at the intersection

of human perception and artificial intelligence. He is an

experienced Java and Android device programmer and he

spent the summer of 2014 interning at Dr. Cakmak’s lab

working on facial expressions for a tablet-based table-top

robot.

Maya Cakmak is an Assistant Professor at the University of

Washington, Computer Science & Engineering Department,

where she directs the Human-Centered Robotics lab. She

received her PhD in Robotics from the Georgia Institute

of Technology in 2012, after which she spent a year as a

post-doctoral research fellow at Willow Garage—one of the

most influential robotics companies in the world. Her research

interests are in human-robot interaction, end-user programming and assistive robotics. Her work aims to develop robots

that can be programmed and controlled by a diverse group

of users with unique needs and preferences, to do useful

tasks. Her work has been published at major Robotics and

AI conferences and journals, demonstrated live in various

venues and has been featured in numerous media outlets.

http://www.mayacakmak.com

http://hcrlab.cs.washington.edu

REFERENCES

[1] Bringing schools and community development to rural Morocco: http://www.synergos.org/globalgivingmatters/features/0403medersat.htm.

[2] Duolingo: www.duolingo.com/.

[3] Jibo: http://www.myjibo.com/.

[4] Kubi, revolve robotics: https://revolverobotics.com/.

[5] The global directory of MOOCs providers: http://www.moocs.co/, November 2012.

[6] Dan Bohus and Eric Horvitz. Facilitating multiparty dialog with

gaze, gesture, and speech. In International Conference on Multimodal

Interfaces and the Workshop on Machine Learning for Multimodal

Interaction, page 5. ACM, 2010.

[7] Gordon Cheng and Alexander Zelinsky. Supervised autonomy:

A framework for human-robot systems development. Autonomous

Robots, 10(3):251–266, 2001.

[8] Albert T Corbett, Kenneth R Koedinger, and John R Anderson. Intelligent tutoring systems. Handbook of human-computer interaction,

pages 849–874, 1997.

[9] Lee J Corrigan, Christopher Peters, and Ginevra Castellano. Identifying task engagement: Towards personalised interactions with educational robots. In Affective Computing and Intelligent Interaction

(ACII), 2013 Humaine Association Conference on, pages 655–658.

IEEE, 2013.

[10] Education First. English proficiency index: http://www.ef.com/epi, 2011.

[11] Jeonghye Han. Emerging technologies: Robot assisted language learning. Language Learning & Technology, 16(3):1–9, 2012.

[12] Takayuki Kanda, Takayuki Hirano, Daniel Eaton, and Hiroshi Ishiguro.

Interactive robots as social partners and peer tutors for children: A field

trial. Human-computer interaction, 19(1):61–84, 2004.

[13] Sungjin Lee, Hyungjong Noh, Jonghoon Lee, Kyusong Lee, and

Gary Geunbae Lee. Cognitive effects of robot-assisted language

learning on oral skills. In INTERSPEECH 2010 Satellite Workshop

on Second Language Studies: Acquisition, Learning, Education and

Technology, 2010.

[14] Sungjin Lee, Hyungjong Noh, Jonghoon Lee, Kyusong Lee, Gary Geunbae Lee, Seongdae Sagong, and Munsang Kim. On the effectiveness

of robot-assisted language learning. ReCALL, 23(01):25–58, 2011.

[15] Michael Levy. Computer-Assisted Language Learning: Context and

Conceptualization. ERIC, 1997.

[16] D Leyzberg, S Spaulding, M Toneva, and B Scassellati. The physical

presence of a robot tutor increases cognitive learning gains. In

Proceedings of the 34th Annual Conference of the Cognitive Science

Society. Austin, TX: Cognitive Science Society, 2012.

[17] Daniel Leyzberg, Samuel Spaulding, and Brian Scassellati. Personalizing robot tutors to individuals’ learning differences. In Proceedings

of the 2014 ACM/IEEE international conference on Human-robot

interaction, pages 423–430. ACM, 2014.

[18] A-M Masgoret and Robert C Gardner. Attitudes, motivation, and

second language learning: a meta–analysis of studies conducted by

gardner and associates. Language learning, 53(1):123–163, 2003.

[19] Javier R Movellan, Fumihide Tanaka, Bret Fortenberry, and Kazuki

Aisaka. The rubi/qrio project: origins, principles, and first steps. In

Development and Learning, 2005. Proceedings. The 4th International

Conference on, pages 80–86. IEEE, 2005.

[20] Bilge Mutlu, Jodi Forlizzi, and Jessica Hodgins. A storytelling robot:

Modeling and evaluation of human-like gaze behavior. In Humanoid

Robots, 2006 6th IEEE-RAS International Conference on, pages 518–

523. IEEE, 2006.

[21] Tiago Ribeiro and Ana Paiva. The illusion of robotic life: principles

and practices of animation for robots. In Proceedings of the seventh

annual ACM/IEEE international conference on Human-Robot Interaction, pages 383–390. ACM, 2012.

[22] Kieron Sheehy and Ashley A Green. Beaming children where they

cannot go: telepresence robots and inclusive education: an exploratory

study. Ubiquitous Learning: an international journal, 3(1):135–146,

2011.

[23] Fumihide Tanaka and Shizuko Matsuzoe. Children teach a care-receiving robot to promote their learning: Field experiments in a classroom for vocabulary learning. Journal of Human-Robot Interaction,

1(1), 2012.

[24] Fumihide Tanaka and Tomoyuki Noda. Telerobotics connecting

classrooms between japan and us: a project overview. In RO-MAN,

2010 IEEE, pages 177–178. IEEE, 2010.

[25] Daphne van Weijen. The language of (future) scientific communication: http://www.researchtrends.com/issue-31-november-2012/the-language-of-future-scientific-communication/, 2012.

[26] W3Techs.com. Usage of content languages for websites: http://www.synergos.org/globalgivingmatters/features/0403medersat.htm, December 2011.

[27] Sang-Seok Yun, Munsang Kim, and Mun-Taek Choi. Easy interface

and control of tele-education robots. International Journal of Social

Robotics, 5(3):335–343, 2013.

[28] Sangseok Yun, Jongju Shin, Daijin Kim, Chang Gu Kim, Munsang

Kim, and Mun-Taek Choi. Engkey: tele-education robot. In Social

Robotics, pages 142–152. Springer, 2011.
